What is the Token Limit for ChatGPT 3.5 and ChatGPT 4?

December 8, 2023

January 10, 2026

ChatGPT can handle large amounts of text. But every chat, prompt, and response still runs into a token limit.

These limits affect how much context a model can process, how long a response can be, and how well ChatGPT remembers earlier messages. The rules have also changed a lot since GPT-3.5 and early GPT-4.

In this guide, you’ll learn:

- What a ChatGPT token is and how token usage is measured

- How token limits differ across ChatGPT models and platforms

- The current token limits for GPT-3.5, GPT-4, GPT-4o, and GPT-4.1

- Practical ways to work within model limits

- When tools like Tactiq improve long-form workflows beyond token size

What is a ChatGPT Token?

A ChatGPT token is a unit of text that a language model processes.

One token can be:

- A single word

- Part of a word

- Punctuation or symbols

- Spaces or non-English characters

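On average, one token equals about 0.75 words in English, or roughly four characters. The exact ratio depends on the tokenizer, which differs between model families, so treat it as an estimate rather than a fixed conversion.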

Word count and token count are related, but not the same. Longer words, code, and non-English text often use more tokens than expected.
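As a rough illustration of the 0.75 words-per-token rule of thumb, here is a minimal Python sketch. The function name `estimate_tokens` is hypothetical, and the result is only an approximation. For exact counts, use a real tokenizer such as OpenAI's Tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate from the ~0.75 words-per-token rule of thumb."""
    words = len(text.split())
    # tokens ≈ words / 0.75 = words * 4 / 3, rounded up with integer math
    return -(-words * 4 // 3)


sample = "Token limits shape how much text a model can read"
print(estimate_tokens(sample))  # 14 (10 words * 4 / 3, rounded up)
```

Code and non-English text will usually come out higher than this estimate, so leave some headroom.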

Tokens are used across the entire interaction:

- System message

- Conversation history

- User input

- Model’s response

When the model’s token limit is reached, the model must shorten or stop its output.

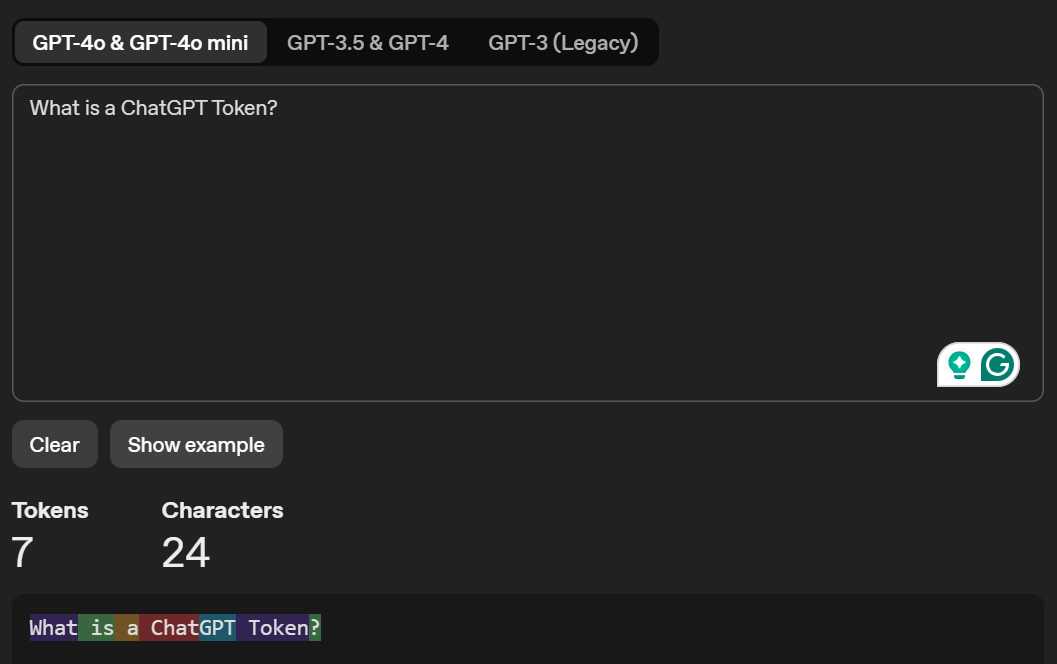

You can access OpenAI’s Tokenizer to visualize how its AI language model tokenizes a text and shows its total token count. Here’s sample text I entered on Tokenizer:

You can also learn more about free vs. paid ChatGPT token limits.

Pro Tip: Meeting transcripts often exceed 20,000 tokens, forcing you to chunk text or lose context in ChatGPT. Tactiq captures and summarizes meetings from Zoom, Google Meet, and Microsoft Teams automatically. No token limits or copy-pasting required.

What is the ChatGPT-3.5 Token Limit?

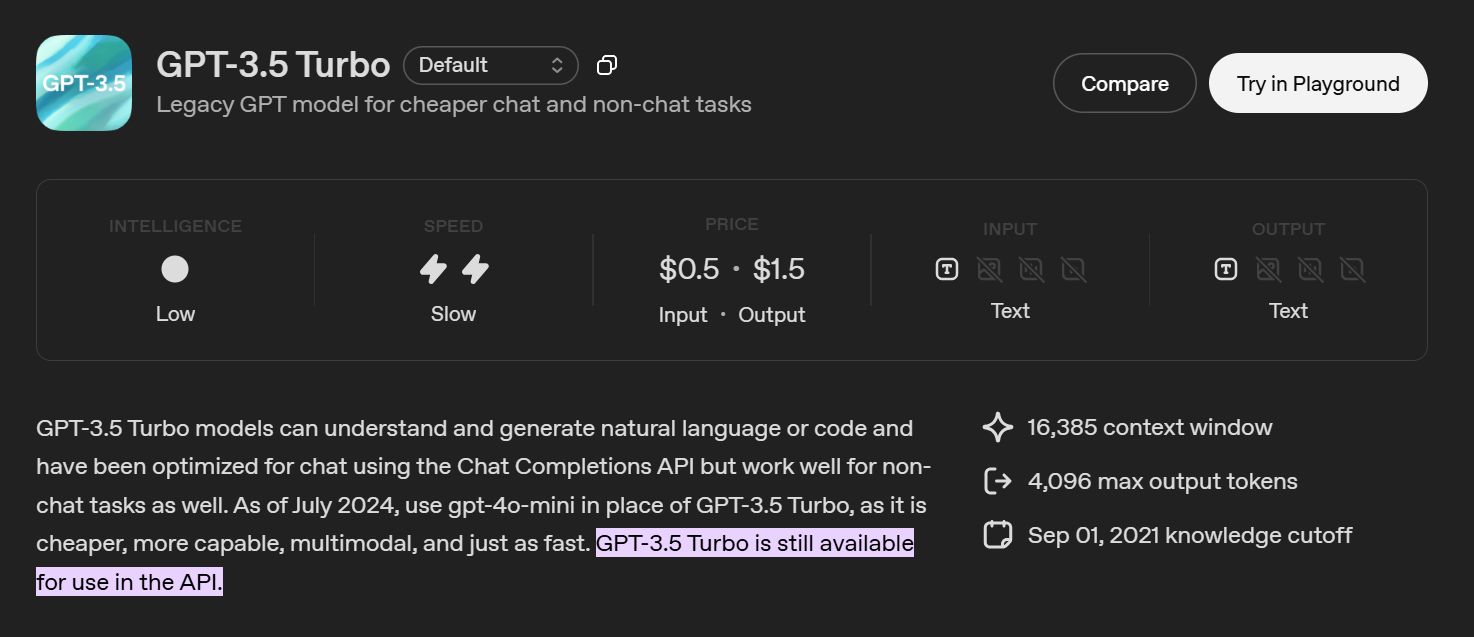

GPT-3.5 is now considered a legacy ChatGPT model. Most users no longer see it in the ChatGPT interface. It is mainly available through the OpenAI API.

The standard gpt-3.5-turbo model supports:

- 16,385 tokens total per API request

- Tokens are shared across input, system message, conversation history, and output

- The model’s response must fit within the remaining token budget

A long prompt or large input reduces the maximum response size.
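The arithmetic behind that trade-off can be sketched in a few lines of Python. The helper name `remaining_output_budget` is hypothetical. The figures are the gpt-3.5-turbo numbers quoted in this article (16,385 total tokens, 4,096 maximum output).

```python
def remaining_output_budget(context_window: int, max_output: int, prompt_tokens: int) -> int:
    """Tokens left for the reply once the prompt has been counted."""
    left_in_window = max(context_window - prompt_tokens, 0)
    # The reply is limited by whichever is smaller: the space left in the
    # context window or the model's own output cap.
    return min(left_in_window, max_output)


CONTEXT_WINDOW = 16_385  # gpt-3.5-turbo total, as quoted in this article
MAX_OUTPUT = 4_096       # gpt-3.5-turbo output cap, as quoted in this article

print(remaining_output_budget(CONTEXT_WINDOW, MAX_OUTPUT, 2_000))   # 4096
print(remaining_output_budget(CONTEXT_WINDOW, MAX_OUTPUT, 15_000))  # 1385
```

With a 2,000-token prompt the output cap is the binding limit. With a 15,000-token prompt the context window is.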

Where GPT-3.5 is still used

- API-based tools and scripts

- Cost-sensitive tasks

- Short prompts and simple chat flows

GPT-3.5 is not designed for long transcripts, large documents, or complex reasoning. Newer models handle larger context length and structured output more reliably.

What is the ChatGPT-4 Token Limit?

ChatGPT-4 does not have a fixed token limit. Limits vary by model and platform.

Legacy GPT-4

Mostly deprecated.

- Context length: ~8,192 tokens

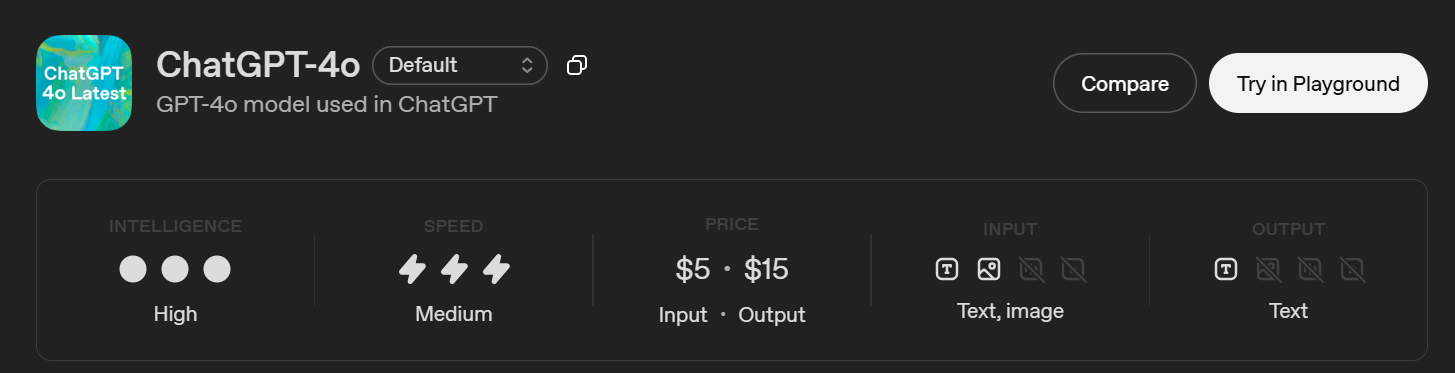

ChatGPT-4o (ChatGPT)

This is the GPT-4 model used in ChatGPT.

- Context window: 128,000 tokens

- Max output: 16,384 tokens

- Knowledge cutoff: October 1, 2023

ChatGPT still applies response caps, so the model may accept large input but return a shorter answer.

GPT-4.1 (API only)

Built for long-form and structured workloads.

- Context window: 1,047,576 tokens

- Max output: 32,768 tokens

- Knowledge cutoff: June 1, 2024

Key point

There is no single ChatGPT-4 token limit. Limits depend on:

- The ChatGPT model

- ChatGPT UI vs OpenAI API

- Context length vs output cap

How do ChatGPT Token Limits Work?

If you’ve used ChatGPT for longer sessions, you’ve probably noticed responses getting shorter over time. We’ve seen this happen after pasting long text, continuing a conversation, or asking follow-up questions on earlier answers.

This comes down to how token limits work.

Every chat shares a single token budget. That includes the system message, your prompts, the conversation history, and the model’s response. As the chat grows, more tokens are already used before ChatGPT even starts generating an answer.

Context length and response length are also different. A model might accept a large input, but still stop early when generating output. In most ChatGPT interfaces, response length is capped below the full context window.

That’s why long chats sometimes lose earlier details or cut off mid-answer. It’s not a bug. It’s the model reaching its limit.
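One common way a chat application keeps a conversation inside the budget is to drop the oldest messages first. The Python sketch below illustrates that idea only. It is not a description of how ChatGPT itself trims history, and `trim_history` and `count_tokens` are hypothetical names (the token counter is passed in by the caller).

```python
def trim_history(messages, budget, count_tokens):
    """Drop the oldest non-system messages until the history fits the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    def total(msgs):
        return sum(count_tokens(m["content"]) for m in msgs)

    # Oldest messages go first, which is why early details get lost.
    while rest and total(system + rest) > budget:
        rest.pop(0)
    return system + rest


history = [
    {"role": "system", "content": "be brief"},
    {"role": "user", "content": "first question about pricing"},
    {"role": "assistant", "content": "first answer"},
    {"role": "user", "content": "second question"},
]
trimmed = trim_history(history, budget=7, count_tokens=lambda s: len(s.split()))
print([m["content"] for m in trimmed])
```

Here the first user message is dropped, so a later question that refers back to pricing would no longer have that context.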

Some users also explore browser-based tools like ChatGPT Atlas to organize long chats. These tools don't increase token limits, but they help manage conversations better.

What are the Differences between ChatGPT-3.5 and ChatGPT-4?

On paper, GPT-3.5 and GPT-4 both generate text. In practice, they behave very differently once you start working with longer inputs, complex tasks, or ongoing conversations.

We’ve felt this difference most clearly when moving from short prompts to real work, like reviewing transcripts or iterating on multi-step tasks.

Context window and output limits

GPT-3.5 Turbo supports a 16K context window, but its maximum output is capped at 4,096 tokens. That means long prompts quickly crowd out the space available for a response.

ChatGPT-4o, by contrast, supports a 128K context window and can generate responses up to 16,384 tokens. You can provide far more background without immediately hitting limits.

GPT-4.1 pushes this further in API use cases, with a 1M+ token context window and much higher output ceilings. This changes how you approach large documents and long conversation histories.
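To make the comparison concrete, here is a small Python sketch that checks which models can fit a given job. The limits are the figures quoted in this article and may change, so verify them against OpenAI's model documentation. `MODEL_LIMITS` and `models_that_fit` are hypothetical names.

```python
# Limits as quoted in this article; confirm current values before relying on them.
MODEL_LIMITS = {
    "gpt-3.5-turbo": {"context": 16_385, "max_output": 4_096},
    "gpt-4o": {"context": 128_000, "max_output": 16_384},
    "gpt-4.1": {"context": 1_047_576, "max_output": 32_768},
}


def models_that_fit(input_tokens: int, output_tokens: int) -> list:
    """Return the models whose context window and output cap cover the job."""
    return [
        name
        for name, limits in MODEL_LIMITS.items()
        if output_tokens <= limits["max_output"]
        and input_tokens + output_tokens <= limits["context"]
    ]


print(models_that_fit(20_000, 2_000))    # ['gpt-4o', 'gpt-4.1']
print(models_that_fit(200_000, 20_000))  # ['gpt-4.1']
```

A 20,000-token transcript, the size mentioned earlier for many meetings, already rules out gpt-3.5-turbo.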

Capability and reasoning differences

GPT-3.5 is optimized for basic text and code generation. It works for simple tasks, but it struggles with:

- Long instructions

- Multi-step logic

- Maintaining consistency across a conversation

GPT-4-class models handle these better. We’ve found they:

- Follow instructions more closely

- Lose context less often

- Produce more structured and coherent responses

This isn’t just about tokens. The underlying architecture and training data differ, which reduces hallucinations and improves reasoning accuracy.

Modalities and features

GPT-3.5 is text-only and supports a limited feature set.

ChatGPT-4o supports:

- Text and image input

- Larger context handling

- Better performance in chat-based workflows

GPT-4.1 adds support for advanced API features like tool calling, structured outputs, and higher-volume processing, which makes it better suited for production systems.

Cost and recommended use

GPT-3.5 is cheaper per token and still appears in cost-sensitive API tasks. That said, OpenAI now recommends newer models for most use cases.

In real workflows, GPT-4-class models are more reliable once prompts grow beyond a few paragraphs or tasks require sustained context.

The real takeaway

The difference between GPT-3.5 and GPT-4 isn’t just how many tokens they support.

It’s how well they:

- Manage context

- Follow instructions

- Handle long inputs without breaking down

Tokens matter, but they’re only part of the story.

How to Get Around Token Limits

Token limits are less restrictive than they used to be. But they still shape how much a model can process and return in one response. In practice, the right approach depends on model choice, prompt structure, and how you manage context over time.

Use models with larger context windows

If you have access, start with models designed for long context.

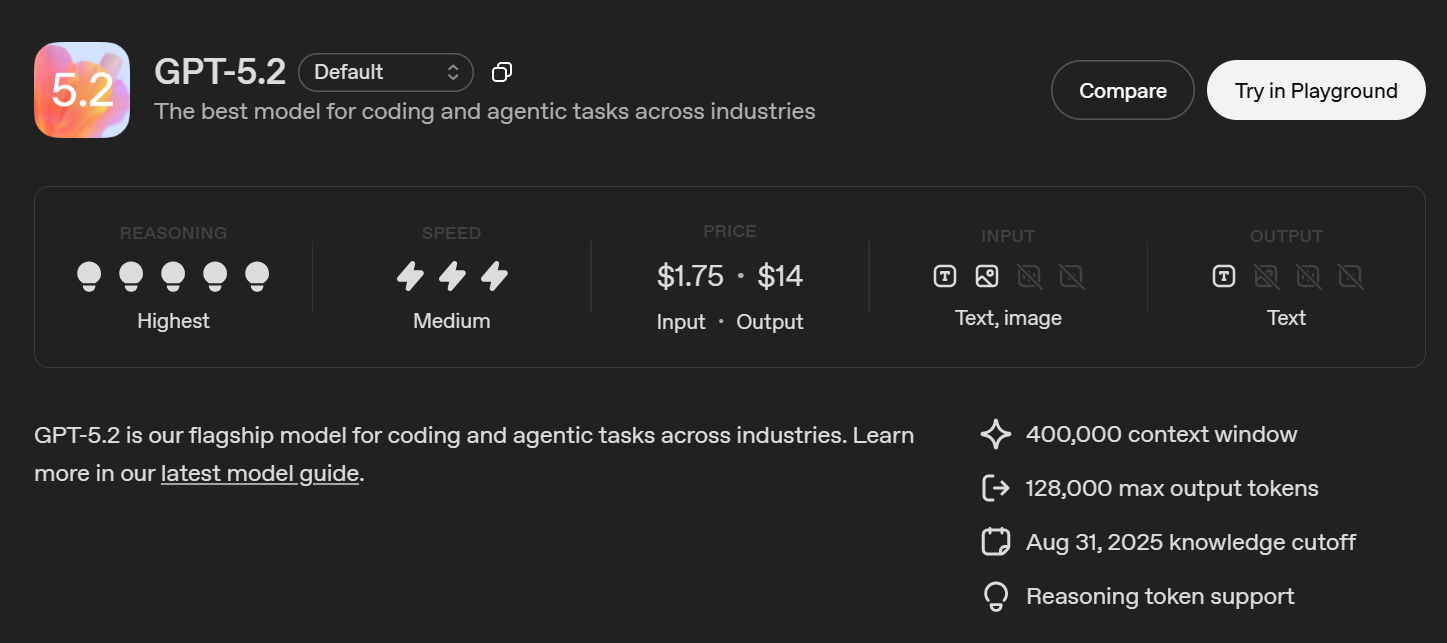

Newer models like GPT-5, GPT-4o, and GPT-4.1 support much larger context windows than earlier generations. This reduces how often you need to trim input or restart a conversation when working with long text or ongoing tasks.

That said, a larger context window does not remove all limits.

Output is still capped

Even when a model can accept large input, the maximum output tokens may be far smaller. This usually shows up when a response ends early or feels incomplete.

When that happens:

- Ask the model to continue in a follow-up message

- Request a summary instead of a full reproduction

- Break the task into multiple steps

This helps keep each response within the model’s output limit.
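The "ask the model to continue" step can be automated when working through the API. The Python sketch below assumes a caller-supplied `generate` function (hypothetical) that returns the reply text and a finish reason. In OpenAI's Chat Completions API, a finish reason of `"length"` indicates the reply stopped at the token cap. A real implementation would also resend the conversation so far with each follow-up.

```python
def generate_with_continuation(generate, prompt, max_rounds=5):
    """Call `generate` repeatedly while the reply is cut off by the output cap."""
    parts = []
    next_prompt = prompt
    for _ in range(max_rounds):
        text, finish_reason = generate(next_prompt)
        parts.append(text)
        if finish_reason != "length":  # "length" means the token cap was hit
            break
        next_prompt = "Continue exactly where you left off."
    return "".join(parts)


# Stand-in for a real model call, used only to demonstrate the loop.
canned = iter([("Part one. ", "length"), ("Part two.", "stop")])
print(generate_with_continuation(lambda p: next(canned), "Write the report"))
```

The `max_rounds` guard keeps the loop from running indefinitely if every reply is truncated.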

Chunk large inputs intentionally

Chunking is still useful, even with modern models.

We typically split long content by:

- Sections

- Topics

- Time ranges in transcripts

Each chunk is processed separately, then combined or summarized. This reduces token pressure and keeps responses more focused.
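A minimal Python sketch of that chunking step is shown below. It groups paragraphs into chunks under a token budget. The names `chunk_by_tokens` and `count_tokens` are hypothetical, and the word-count lambda in the usage example is a stand-in for a real tokenizer.

```python
def chunk_by_tokens(paragraphs, max_tokens, count_tokens):
    """Group paragraphs into chunks that stay within max_tokens each.

    A single paragraph larger than max_tokens is kept whole in its own chunk.
    """
    chunks, current, used = [], [], 0
    for para in paragraphs:
        cost = count_tokens(para)
        # Start a new chunk when adding this paragraph would exceed the budget.
        if current and used + cost > max_tokens:
            chunks.append("\n\n".join(current))
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        chunks.append("\n\n".join(current))
    return chunks


sections = ["a b c", "d e", "f g h i", "j"]
for chunk in chunk_by_tokens(sections, max_tokens=5, count_tokens=lambda s: len(s.split())):
    print(repr(chunk))
```

Splitting on paragraph or section boundaries, rather than at a fixed character count, keeps each chunk readable on its own.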

Use summaries to preserve context

Instead of carrying the full conversation history forward, summarize earlier messages and reuse that summary as input.

This keeps context compact while retaining key details. It’s especially helpful in long chats and multi-step workflows.
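The summary approach might look like the following Python sketch. `compact_history` is a hypothetical helper, and `summarize` is a caller-supplied function (in practice, probably another model call) that turns older messages into a short text.

```python
def compact_history(messages, keep_last, summarize):
    """Replace all but the last `keep_last` messages with one summary message."""
    if len(messages) <= keep_last:
        return list(messages)
    split = len(messages) - keep_last
    older, recent = messages[:split], messages[split:]
    summary_message = {
        "role": "system",
        "content": f"Summary of earlier conversation: {summarize(older)}",
    }
    return [summary_message] + recent


chat = [
    {"role": "user", "content": "Here is the Q3 plan..."},
    {"role": "assistant", "content": "Noted. Three goals."},
    {"role": "user", "content": "What about budget?"},
    {"role": "assistant", "content": "Budget is flat."},
]
# Stand-in summarizer for demonstration only.
compact = compact_history(chat, keep_last=2, summarize=lambda msgs: f"{len(msgs)} earlier messages")
print(compact[0]["content"])
```

Keeping the most recent messages verbatim preserves the immediate thread, while the summary carries the older details forward in far fewer tokens.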

API best practices

If you’re working through the API:

- Set max_tokens for each API call

- Stream responses so long outputs arrive incrementally (streaming does not reduce the token count)

- Maintain a compact state, such as a running summary, instead of resending the full conversation history

Even with GPT-5 and extended context models, thoughtful token usage still matters.
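As an illustration of the first two practices, here is a Python sketch that assembles a Chat Completions-style request body with an explicit output cap and streaming turned on. It only builds the payload and does not call the API. `build_chat_request` is a hypothetical helper, and some newer models expect `max_completion_tokens` in place of `max_tokens`, so check the API reference for the model you use.

```python
import json


def build_chat_request(model, messages, max_tokens, stream=True):
    """Assemble a Chat Completions-style request body with an explicit output cap."""
    return {
        "model": model,
        "messages": messages,
        "max_tokens": max_tokens,  # upper bound on generated tokens
        "stream": stream,          # deliver the reply incrementally
    }


body = build_chat_request(
    "gpt-4o",
    [{"role": "user", "content": "Summarize the main decisions and action items."}],
    max_tokens=500,
)
print(json.dumps(body, indent=2))
```

Setting the cap per call keeps costs predictable and makes truncated replies easier to detect and handle.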

Simplify complex questions

Long explanations and full transcripts use tokens fast. We’ve run into this often with meeting notes.

Instead of pasting everything, focus on the essentials. For example:

“Summarize the main decisions and action items in this conversation.”

This reduces input size and leads to clearer responses.

When workarounds fall short

Even with models like GPT-5 or GPT-4.1, summarizing and chunking still take effort. At some point, the issue stops being token limits and becomes workflow overhead.

That’s where tools built for organizing long transcripts and outputs start to matter.

How Tactiq Handles Long Transcripts Beyond ChatGPT Token Limits

Large context windows help. But when work involves long transcripts and recurring meetings, the challenge is less about tokens and more about structure.

Tactiq is an AI meeting transcription and note-taking tool designed for online meetings. It captures live transcripts and turns them into summaries, action items, and searchable notes.

Tactiq works with Zoom, Microsoft Teams, and Google Meet. You can get started for free, with no paid subscription required.

Key benefits:

- Captures full meeting transcripts without manual copying

- Structures notes into summaries and action items

- Keeps conversations searchable after the meeting

- Reduces prompt writing and context management

- Works well for recurring meetings and long discussions

Even with extended context models like GPT-5 or GPT-4.1, organizing long conversations still takes effort. Tactiq focuses on turning meeting content into usable outputs, not just holding more context.

Install the free Tactiq Chrome Extension to capture, organize, and reuse meeting insights without worrying about token limits.

Wrapping Up

Token limits still matter, even as models support larger context windows. They shape how much input a model can process, how long a response can be, and how reliably context is carried across a conversation.

Newer models like GPT-4o, GPT-4.1, and GPT-5 reduce friction, but they don’t remove it. Long transcripts, repeated meetings, and follow-up work still require structure, not just more tokens.

ChatGPT works well for generating responses. For meeting-heavy workflows, tools like Tactiq focus on turning conversations into summaries, action items, and searchable notes that remain useful after the chat ends.

If you regularly work with long-form meeting content, combining large-context models with a purpose-built tool can save time and reduce manual overhead.

FAQs About the Token Limit for ChatGPT 3.5 and ChatGPT 4

What is the token limit for ChatGPT-4?

There is no single limit. ChatGPT-4 token limits depend on the model and platform. ChatGPT-4o supports a 128,000-token context window with up to 16,384 output tokens, while API models like GPT-4.1 support much larger limits.

What is the difference between GPT-3.5 and GPT-4o?

GPT-3.5 handles short prompts and basic tasks but has a smaller context and lower reasoning ability. GPT-4o supports much larger context, stronger reasoning, and image input, making it better for long conversations and complex tasks.

What is the token limit for GPT-4o?

GPT-4o supports a 128,000-token context window and up to 16,384 output tokens. Input and output tokens share the same context window, and ChatGPT UI response caps may apply.

Does GPT-4 still have a limit?

Yes. All GPT models have limits. Even with large context windows, models still cap maximum output size and total tokens per request based on the model and access method.

How many tokens can ChatGPT-3.5 handle?

GPT-3.5 Turbo supports 16,385 tokens total per API request, with a maximum output of 4,096 tokens. It is considered a legacy model and is mainly used for lightweight or cost-sensitive tasks.

A ChatGPT token is a small chunk of text—like a word, part of a word, or punctuation—that the AI uses to process your input and generate responses. Understanding tokens helps you craft prompts that fit within ChatGPT’s memory limits, so you get more complete and useful answers.

The original ChatGPT-3.5 had a 4,096-token limit, and the original GPT-4 allowed up to 8,192 tokens. Current models go well beyond that: gpt-3.5-turbo supports 16,385 tokens and GPT-4o supports 128,000. If your prompt and response together exceed a model’s limit, ChatGPT may forget earlier context or shorten its answers, which can disrupt your workflow.

You can draft clear, concise prompts, set a length limit for responses, and summarize complex questions to reduce token usage. These strategies help you get more detailed and relevant answers without losing important information.

Tactiq offers unlimited AI credits for transcriptions and summaries, so you don’t have to worry about token limits. You can capture, summarize, and interact with your meeting transcripts efficiently, saving time and ensuring you never miss key details.

With Tactiq, you get automated meeting notes, customizable AI Meeting Kits, action item tracking, and the ability to ask questions directly from your transcripts. This means you spend less time on manual note-taking and more time acting on insights, making your meetings more productive.

Want the convenience of AI summaries?

Try Tactiq for your upcoming meeting.
